My on-going notes on the topic…

Modern SOA Coordination, Transactions, Business Activities, Orchestration and Choreography

Modern SOA solutions are composed of several services, sometimes structured in layers from the most elementary services to complex business services.

There are cases where the technologies and frameworks used to build them are different. This is a major benefit, but it also adds complexity that demands technology to manage it.

One important field of study is how services exchange messages. This field produced a set of patterns known as Message Exchange Patterns.

Message Exchange Patterns – MEPs

The most basic exchange patterns are Request/Response, Fire-And-Forget and Solicit-Response.

The Request/Response is the basic pattern where a consumer emits a request message to a provider and receives the response back with the result of its request.

The Fire-And-Forget pattern is used when a consumer doesn’t require or care about the response of an operation. It emits the message and goes on with its life.

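The Solicit-Response is the inverse of the Request/Response pattern: the provider initiates the exchange by sending a message to the consumer and then waits for the consumer's response.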

Complex MEPs

Complex MEPs are created by combining the previous patterns. One popular complex MEP is Publish-Subscribe. In this pattern a party contacts another one, requesting to be notified when a given event (also known as a topic) happens. When the event happens, the second party goes through its list of subscribers and publishes a notification to each of them.
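The Publish-Subscribe exchange can be sketched in a few lines of Python. This is only an in-memory illustration: the `Publisher` class, its method names and the `order-created` topic are assumptions made for the example and do not come from any WS-* specification.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Callback = Callable[[str, object], None]


class Publisher:
    """Keeps a list of subscriber callbacks per topic (hypothetical API)."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callback]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callback) -> None:
        # The subscriber contacts the publisher and asks to be notified.
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, payload: object) -> int:
        # When the event happens, walk the subscriber list and notify each one.
        for callback in self._subscribers[topic]:
            callback(topic, payload)
        return len(self._subscribers[topic])


received = []
publisher = Publisher()
publisher.subscribe("order-created", lambda topic, payload: received.append((topic, payload)))
notified = publisher.publish("order-created", {"id": 1})
```

Each notification is itself a Fire-And-Forget message, which is why Publish-Subscribe counts as a composition of the basic patterns.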

Service Activities and Coordination

A business process is composed of multiple steps in multiple services. A service activity is any service interaction required to complete business tasks.

In a business process the order of activities is important; there are constraints limiting when an activity can be initiated or concluded. This introduces contextual information in the runtime environment so that it can keep track of the process state.

WS-Coordination is a WS-* standard describing a protocol to introduce and manage this contextual information. It is based on the coordinator service model:

- Activation Service - Creates contexts and associates them to activities.

- Registration Service - Where participant services register to use contextual information from a certain activity and a supported protocol.

- Coordinator - The controller service that manages the composition.

- Protocol-Specific Services - WS-Coordination is a building block for other protocols like WS-AtomicTransaction. These protocols require specific services for managing details not covered by the WS-Coordination standard.
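To make the roles above more concrete, here is a toy model of the activation and registration services. It is a sketch under assumptions: the class and method names are illustrative, the participant and protocol strings are made up, and no SOAP messaging is involved.

```python
import uuid
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class CoordinationContext:
    """Contextual information that travels with the messages of one activity."""
    identifier: str
    coordination_type: str


@dataclass
class Coordinator:
    """Toy coordinator exposing activation and registration operations."""
    contexts: Dict[str, CoordinationContext] = field(default_factory=dict)
    registrations: Dict[str, List[Tuple[str, str]]] = field(default_factory=dict)

    def create_coordination_context(self, coordination_type: str) -> CoordinationContext:
        # Activation service: create a context and associate it with a new activity.
        context = CoordinationContext(str(uuid.uuid4()), coordination_type)
        self.contexts[context.identifier] = context
        self.registrations[context.identifier] = []
        return context

    def register(self, context: CoordinationContext, participant: str, protocol: str) -> None:
        # Registration service: a participant joins an activity under one protocol.
        if context.identifier not in self.contexts:
            raise KeyError("unknown coordination context")
        self.registrations[context.identifier].append((participant, protocol))


coordinator = Coordinator()
ctx = coordinator.create_coordination_context("atomic-transaction")
coordinator.register(ctx, "inventory-service", "Durable2PC")
```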

WS-AtomicTransaction

WS-AtomicTransaction is a coordination type, an extension to use with the WS-Coordination context management framework.

A service participates in an atomic transaction by first receiving a coordination context from the activation service. After that, it is allowed to register for the available transaction protocols.

The primary transaction protocols are:

- Completion protocol to initiate the commit or abort states.

- Durable2PC protocol for services representing permanent data repositories.

- Volatile2PC protocol for services representing volatile data repositories.

An atomic transaction should be as short as possible in terms of duration. For the time it lasts there will be resources locked and concurrent requests will have to wait. Naturally the scalability of the application is greatly influenced by this.

Two-Phase Commit Protocol: Basic Algorithm

Commit-request phase

1. The coordinator sends a query to commit message to all transaction participants and waits until it has received a reply from all of them.

2. Each participant executes the transaction up to the point where it has to decide to commit or abort.

3. It replies with an agreement message (votes Yes to commit), if the transaction succeeded, or an abort message (No, not to commit), if the transaction failed.

Commit phase

Success

If the coordinator received an agreement message from all participants during the commit-request phase:

1. The coordinator sends a commit message to all the cohorts.

2. Each cohort completes the operation, and releases all the locks and resources held during the transaction.

3. Each cohort sends an acknowledgment to the coordinator.

4. The coordinator completes the transaction when acknowledgments have been received.

Failure

If any cohort sent an abort message during the commit-request phase:

1. The coordinator sends a rollback message to all the cohorts.

2. Each cohort undoes the transaction using the undo log, and releases the resources and locks held during the transaction.

3. Each cohort sends an acknowledgment to the coordinator.

4. The coordinator completes the transaction when acknowledgments have been received.
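The two phases can be sketched in a few lines of Python. This is a minimal in-memory sketch, not a real transaction manager: `Participant`, `can_commit` and `two_phase_commit` are hypothetical names, and timeouts, durable logging and crash recovery are left out.

```python
from typing import List


class Participant:
    """Toy cohort; `can_commit` stands in for the outcome of the local work."""

    def __init__(self, name: str, can_commit: bool = True) -> None:
        self.name = name
        self.can_commit = can_commit
        self.state = "active"

    def prepare(self) -> bool:
        # Commit-request phase: do the work and vote Yes (True) or No (False).
        self.state = "prepared" if self.can_commit else "aborted"
        return self.can_commit

    def commit(self) -> None:
        self.state = "committed"  # a real cohort would release its locks here

    def rollback(self) -> None:
        self.state = "rolled back"  # a real cohort would apply its undo log


def two_phase_commit(participants: List[Participant]) -> bool:
    # Phase 1: wait for a vote from every participant.
    votes = [p.prepare() for p in participants]
    # Phase 2: commit only on a unanimous Yes, otherwise roll everyone back.
    if all(votes):
        for p in participants:
            p.commit()
        return True
    for p in participants:
        p.rollback()
    return False
```

A single No vote is enough to roll back every cohort, including those that voted Yes.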

Business Activities

Business Activities manage long-running service activities. They do not support rolling back operations and differ from atomic transactions in the way they deal with errors. It is not possible to hold locks on data to ensure ACID properties in these interaction patterns.

Business Activities deal with concurrency and errors by providing alternative business logic to reverse previously made changes to the system's state.
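The idea of reversing changes with alternative business logic, often called compensation, can be sketched as follows. This is an illustrative sketch only: `run_business_activity` and the travel-booking steps are invented for the example and are not part of WS-BusinessActivity.

```python
from typing import Callable, List, Tuple

Step = Tuple[Callable[[], None], Callable[[], None]]  # (action, compensation)


def run_business_activity(steps: List[Step]) -> bool:
    """Run steps in order; on failure, compensate completed steps in reverse."""
    completed: List[Callable[[], None]] = []
    for action, compensate in steps:
        try:
            action()
        except Exception:
            # No rollback is available, so undo with business logic instead.
            for undo in reversed(completed):
                undo()
            return False
        completed.append(compensate)
    return True


log: List[str] = []


def charge_card() -> None:
    raise RuntimeError("payment declined")


succeeded = run_business_activity([
    (lambda: log.append("reserve seat"), lambda: log.append("release seat")),
    (lambda: log.append("book hotel"), lambda: log.append("cancel hotel")),
    (charge_card, lambda: log.append("refund card")),
])
```

Unlike a rollback, the intermediate states ("reserve seat", "book hotel") were visible to other parties before they were compensated.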

WS-BusinessActivity is the WS-* protocol for these interaction patterns.

On top of this, Orchestration allows business logic to be expressed in a standardized way using services. This is the role of WS-BPEL, but it is out of the scope of this talk.

Distributed Transactions in the WCF way

WCF is able to propagate transactions across the service boundary. This feature is known as transaction flow.

Transaction flow must be enabled on the binding on both sides of the communication to work.

    <bindings>
      <netTcpBinding>
        <binding name="netTcpWithTransactions" transactionFlow="true" />
      </netTcpBinding>
    </bindings>

Distributed transactions do not require reliability in the transport, but enabling it reduces the number of transactions aborted by timeout (caused by lost messages).

    <bindings>
      <netTcpBinding>
        <binding name="netTcpWithTransactions" transactionFlow="true">
          <reliableSession enabled="true" />
        </binding>
      </netTcpBinding>
    </bindings>

The transaction flow is configured per service operation with the TransactionFlow attribute:

· Allowed – The operation will accept incoming transactions.

· NotAllowed – The operation will not accept incoming transactions.

· Mandatory – The operation will only work if there is an incoming transaction.

Transaction flow is not allowed for one-way calls (the client would not be able to abort the transaction).

Supported Transaction Protocols

WCF supports the following transaction protocols:

· Lightweight – Used inside the same AppDomain.

· OleTx – Used to propagate across AppDomain, process and machine boundaries. It uses RPC calls in a format that is Windows specific. When crossing over the internet it can cause problems because it uses ports that are typically closed.

· WS-AtomicTransaction – Used to propagate across AppDomain, process and machine boundaries. Unlike OleTx it can cross the internet because it is HTTP based, supported by SOAP extensions.

The bindings that support transactions are designed to switch to the “best” (lighter) protocol depending on the operation conditions.

Transaction Managers

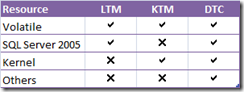

Associated with each transaction protocol and with a resource kind there is a transaction manager:

· LTM – The Lightweight Transaction Manager manages transactions inside a single AppDomain when there is only one open connection in the transaction. If two connections are opened in the same transaction and AppDomain, the DTC is used. With SQL Server 2008 this is no longer true, and the LTM can be used with multiple open connections in the same AppDomain.

· KTM – The Kernel Transaction Manager. It was introduced with Windows Vista and manages kernel resources that support transactions.

· DTC – Distributed Transaction Coordinator. Manages both OleTx and WS-AT transactions.

WCF assigns the appropriate transaction manager; it starts with the lightest possible. When new resource managers enlist in the transaction, WCF can promote the transaction to the next level manager. Once promoted there is no going back; it will run elevated until abort or commit.

Ambient Transaction

The ambient transaction is the transaction in which the current code executes. It is available in the static property Transaction.Current. It is stored per thread.
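The per-thread storage of the ambient transaction can be illustrated with an analogy in Python. This sketch does not use System.Transactions: `transaction_scope` and `current_transaction` are hypothetical helpers that only mimic the "one ambient transaction per thread" behaviour with `threading.local`.

```python
import threading
from contextlib import contextmanager
from typing import Iterator, List, Optional

_ambient = threading.local()  # one slot per thread, like a thread-static field


def current_transaction() -> Optional[str]:
    return getattr(_ambient, "transaction", None)


@contextmanager
def transaction_scope(name: str) -> Iterator[str]:
    previous = current_transaction()
    _ambient.transaction = name  # becomes the ambient transaction on this thread
    try:
        yield name
    finally:
        _ambient.transaction = previous  # restore whatever was ambient before


seen_in_other_thread: List[Optional[str]] = []
with transaction_scope("tx-1"):
    inside = current_transaction()
    worker = threading.Thread(
        target=lambda: seen_in_other_thread.append(current_transaction())
    )
    worker.start()
    worker.join()
after = current_transaction()
```

The worker thread sees no ambient transaction even though it was started inside the scope, which is the practical consequence of per-thread storage.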

Local Transaction and Distributed Transaction

The Transaction object is used for both distributed and local transactions. Two identifiers are available through its TransactionInformation property: LocalIdentifier and DistributedIdentifier. The LocalIdentifier is always assigned, but the DistributedIdentifier is only created when the transaction is promoted to the DTC transaction manager.

Transactional Service Development

As mentioned in the book Programming WCF Services, WCF provides both explicit and implicit transaction programming modes. The explicit mode is used when the transactional objects are created explicitly in the code. The implicit mode is used when the code is marked with special attributes.

When the TransactionScopeRequired property of the OperationBehavior attribute is set to true, a transaction object is made available, either by using a transaction that is flowing through the execution chain or by providing a new one.

What will actually happen depends on the way the Transaction Flow is configured. The following picture summarizes the available options:

- In the Client/Service mode the service will use the client transaction if possible. When it is not available it will create a service side transaction.

- In the Client mode the service only uses the client transaction.

- In the Service mode the service always has a transaction and it must differ from any transaction the client may or may not have.

- In the None mode the service never has a transaction.
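The four modes can be summarized as a small decision function. This is a model of the behaviour described in the list above, not WCF code: the `Mode` enum, the string transaction identifiers and the error raised in Client mode are assumptions made for the illustration.

```python
from enum import Enum
from typing import Optional


class Mode(Enum):
    CLIENT_SERVICE = "Client/Service"
    CLIENT = "Client"
    SERVICE = "Service"
    NONE = "None"


def service_transaction(mode: Mode, client_tx: Optional[str]) -> Optional[str]:
    """Return the transaction the service operation would run under."""
    if mode is Mode.CLIENT_SERVICE:
        # Join the client's transaction when there is one, else start our own.
        return client_tx if client_tx is not None else "service-tx"
    if mode is Mode.CLIENT:
        # Assumption: without a client transaction the call is rejected.
        if client_tx is None:
            raise RuntimeError("a client transaction is required")
        return client_tx
    if mode is Mode.SERVICE:
        # Always a fresh service-side transaction, never the client's.
        return "service-tx"
    return None  # Mode.NONE: the operation runs without a transaction
```

For example, `service_transaction(Mode.SERVICE, "client-tx")` returns `"service-tx"`, reflecting that the service transaction must differ from the client's.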

WCF manages almost every aspect of transactions except for the fact that it does not know if it should abort or commit. For that, the participating parties must vote to either abort or commit.

The voting can be configured declaratively with the TransactionAutoComplete property in the OperationBehavior attribute. In this case WCF will vote commit if there are no errors (exceptions) in the operation.

The other option is explicit voting. In this case the operation must call the SetTransactionComplete method on the OperationContext. It must do so if there are no errors and it must do it only once. A second call would raise an InvalidOperationException.
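The declarative voting rule (commit when the operation finishes without an exception, abort otherwise) can be mimicked with a decorator. This is an analogy in Python, not the WCF mechanism: `auto_complete`, `votes` and `transfer` are hypothetical names used only for this sketch.

```python
from functools import wraps
from typing import Callable, List

votes: List[str] = []  # stands in for the votes the transaction manager collects


def auto_complete(operation: Callable[..., object]) -> Callable[..., object]:
    """Vote commit when the operation returns, abort when it raises."""

    @wraps(operation)
    def wrapper(*args: object, **kwargs: object) -> object:
        try:
            result = operation(*args, **kwargs)
        except Exception:
            votes.append("abort")
            raise
        votes.append("commit")
        return result

    return wrapper


@auto_complete
def transfer(amount: int) -> int:
    if amount <= 0:
        raise ValueError("amount must be positive")
    return amount
```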

Isolation Modes (enumeration in System.Transactions)

· Unspecified

· ReadUncommitted

· ReadCommitted

· RepeatableRead

· Serializable

· Chaos

· Snapshot

A short summary of the main concurrency effects

- Lost Updates - When different operations select the same row and then update it based on the value originally selected. The last update overwrites the others.

- Dirty Read - Actions dependent on a certain row can follow wrong paths based on values that have not been committed. The data can be modified before being committed leading the system to a state that violates business rules.

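- Non-repeatable read - Several reads of the same row return different values because the row is being modified by other transactions.

- Phantom reads - Reading a set of rows more than once returns different sets, because another transaction inserted or deleted rows in that range between the reads. For example, the first read contains rows that will be deleted on commit; those rows will not come up again.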
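The lost update effect is easy to reproduce without a database. The sketch below simulates two interleaved transactions on an in-memory row; the `stock` column and the quantities are made up for the example.

```python
def lost_update_demo() -> int:
    row = {"stock": 10}

    # Both transactions read the row before either one writes.
    read_by_t1 = row["stock"]
    read_by_t2 = row["stock"]

    # Each computes its update from the value it originally selected.
    row["stock"] = read_by_t1 - 3  # T1 sells 3 units
    row["stock"] = read_by_t2 - 4  # T2 sells 4 units and overwrites T1

    return row["stock"]


final_stock = lost_update_demo()
```

Seven units were sold in total, so the correct stock would be 3, yet the row ends up at 6 because T1's update was lost.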

How to analyze what locks are in place at a given instant?

Use Windows performance counters.

Use SQL Server Profiler.

Query sys.dm_tran_locks.

Use the EnumLocks API.

How to discover long running transactions

Query the dynamic management view sys.dm_tran_database_transactions.

Important rules to minimize deadlocks

- Access objects in the same order.

- Avoid user interaction in transactions.

- Keep transactions short and in one batch.

- Use a lower isolation level.

- Use a row versioning-based isolation level.

- Set READ_COMMITTED_SNAPSHOT database option ON to enable read-committed transactions to use row versioning.

- Use snapshot isolation.

- Use bound connections.
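The first rule, accessing objects in the same order, can be illustrated with plain locks. This is an analogy using Python threads rather than SQL Server: the `accounts` data and `transfer_funds` helper are hypothetical, and the sketch assumes `source` and `target` are always different.

```python
import threading
from typing import Dict

accounts: Dict[str, int] = {"A": 100, "B": 100}
locks: Dict[str, threading.Lock] = {name: threading.Lock() for name in accounts}


def transfer_funds(source: str, target: str, amount: int) -> None:
    # Always lock in sorted-name order, regardless of the transfer direction,
    # so two opposite transfers can never each hold the lock the other needs.
    first, second = sorted((source, target))
    with locks[first], locks[second]:
        accounts[source] -= amount
        accounts[target] += amount


threads = [
    threading.Thread(target=transfer_funds, args=("A", "B", 10)),
    threading.Thread(target=transfer_funds, args=("B", "A", 5)),
]
for t in threads:
    t.start()
for t in threads:
    t.join()
```

If each transfer locked its source first instead, the two threads could each take one lock and wait forever for the other.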

Enable snapshot and row versioning

Read committed isolation using row versioning is enabled by setting the READ_COMMITTED_SNAPSHOT database option ON. Snapshot isolation is enabled by setting the ALLOW_SNAPSHOT_ISOLATION database option ON. When either option is enabled for a database, the Database Engine maintains versions of each row that is modified. Whenever a transaction modifies a row, an image of the row before modification is copied into a page in the version store.
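The version store idea can be modelled with a toy table. This is a heavily simplified sketch and not how the Database Engine is implemented: `VersionedTable`, its logical clock and the assumption that every write commits immediately are all inventions for the illustration.

```python
from typing import Dict, List, Tuple


class VersionedTable:
    """Toy table: every update copies the before-image into a version store."""

    def __init__(self) -> None:
        self.clock = 0
        self.rows: Dict[str, Tuple[int, object]] = {}  # key -> (commit time, value)
        self.version_store: Dict[str, List[Tuple[int, object]]] = {}

    def write(self, key: str, value: object) -> None:
        self.clock += 1
        if key in self.rows:
            # Copy the image of the row before modification.
            self.version_store.setdefault(key, []).append(self.rows[key])
        self.rows[key] = (self.clock, value)

    def snapshot_read(self, key: str, snapshot_time: int) -> object:
        # Return the newest version that already existed at `snapshot_time`.
        versions = self.version_store.get(key, []) + [self.rows[key]]
        visible = [value for stamp, value in versions if stamp <= snapshot_time]
        if not visible:
            raise KeyError(key)
        return visible[-1]


table = VersionedTable()
table.write("price", 10)
snapshot = table.clock      # a reader starts its snapshot here
table.write("price", 12)    # a later writer modifies the row
old_value = table.snapshot_read("price", snapshot)
new_value = table.snapshot_read("price", table.clock)
```

The reader holding the earlier snapshot still sees the old value without blocking the writer, which is the behaviour row versioning is meant to provide.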